You need a plan for archiving

You have static data that needs to be archived in a secure way. This has to happen because the data volumes are exploding. EMC has a way to make this happen, within one offering, with one plan.

Not having a plan costs you real money, real customer satisfaction and stops your future development in its tracks!

Challenges ahead

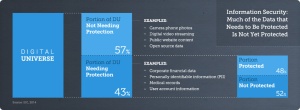

Today, data protection is so much more than just backup. Customer demands drive ever tighter protection needs, all the way up to continuous availability. Yet we cannot keep all data at that level; it's simply not financially viable. And it's not getting any easier: EMC and IDC have just announced the 7th Digital Universe study, and its findings give cause for thought. The size of all data will double every 24 months, but there is reason to believe that the number of IT professionals never will. Also, hands up everyone who thinks their IT budget in two years will be twice what it is today.

This calls for a strategy!

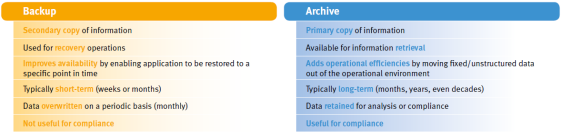

Static data simply has to move out of the way and stay protected without being copied over and over again. Backup certainly isn't the answer here.

In order to strategize, we need information, tactics and visibility.

Information

What is getting stored, what is it worth,

who needs to have access to it,

how long should we keep it for?

Tactics

How do we extract the data, where do we put it, how will it be accessed, how will it get protected?

Visibility

Is the data compliant with rules, regulations and laws? How do we search for it? How to track it, retain it, discard it?
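Retaining and discarding usually comes down to a per-item policy decision. The sketch below is a minimal illustration, assuming a simple retention period per item plus a hold flag that blocks disposal; the `ArchivedItem` record and the `disposition` function are hypothetical names, not part of any EMC product.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ArchivedItem:
    # Hypothetical record for one archived document; field names are illustrative.
    name: str
    archived_on: date
    retention_days: int       # how long the (assumed) policy says we must keep it
    legal_hold: bool = False  # a hold blocks disposal regardless of age


def disposition(item: ArchivedItem, today: date) -> str:
    """Return 'retain' or 'discard' for one item under a simple age-based policy."""
    if item.legal_hold:
        return "retain"  # held items are never discarded, even past retention
    expires_on = item.archived_on + timedelta(days=item.retention_days)
    return "discard" if today >= expires_on else "retain"
```

Keeping the decision in one small, pure function makes the policy easy to audit, which matters when you have to show that data was discarded according to the rules.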

Let’s plan!

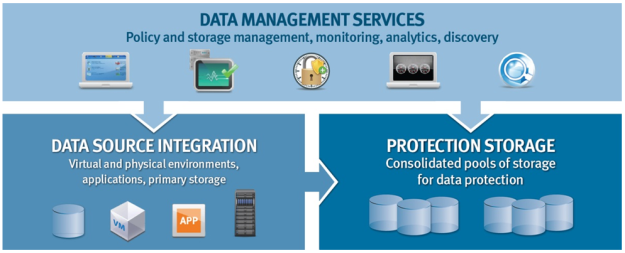

You will need three components to make the strategy a reality:

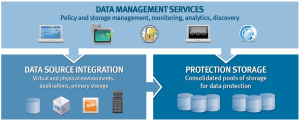

Protection storage

Put the archived content somewhere it can stay for as long as it needs to: a pool of storage that reduces costs, heals itself and guarantees the integrity of the data.

Application integration

No matter where your content comes from, it needs to be analysed, tiered and moved, all the while making the process as seamless as possible for your end users.
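The "tiered and moved" step typically starts by deciding which content is static enough to move. Here is a minimal sketch, assuming last-access time is the only criterion and a hypothetical 365-day idle threshold; `FileRecord` and `select_for_archive` are illustrative names, not a product API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FileRecord:
    # Hypothetical metadata for one file on a primary file server.
    path: str
    size_bytes: int
    last_access: datetime


def select_for_archive(files, now, idle_days=365):
    """Pick files untouched for at least `idle_days`; return them and the bytes moved off primary."""
    cutoff = now - timedelta(days=idle_days)
    candidates = [f for f in files if f.last_access <= cutoff]
    freed = sum(f.size_bytes for f in candidates)
    return candidates, freed
```

Returning the freed capacity alongside the candidate list gives you the number that usually justifies the move in the first place.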

Data Management

Offer the archive services out to your users and make sure they are easily consumed and user driven, whatever the compliance and economic demands. With the data now in one central archive, you can easily add value by offering discovery, search and hold.

Components of the solution

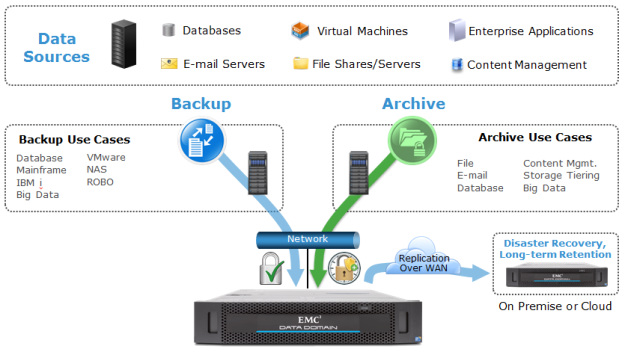

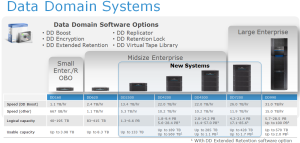

EMC offers a wide range of systems that can serve as the persistent, central storage for your archive. More and more, we see customers converging archive and backup storage onto Data Domain systems, for a few reasons:

- Deduplication: many copies frequently exist, even in an archive, and content is often redundant across different documents and files. Deduplication allows more content to be stored in a smaller footprint, more economically and with less administration.

- The Data Domain Data Invulnerability Architecture keeps the data safe from the initial write onward: integrity is checked after data reduction, after the write, and periodically after that. Finally, content is verified again when it is retrieved.

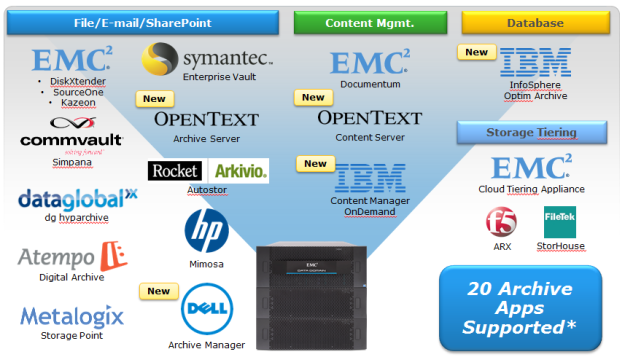

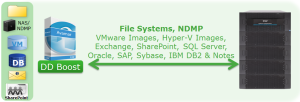

- Data Domain has been designed to support both backup and archive workloads. These use cases are vastly different, but Data Domain can store hundreds of millions of small files, protect them and replicate them. Other demands of archival protection are retention enforcement and possibly encryption. Data Domain ticks these boxes too, backed by integration with an impressive list of archive applications (more than 20 to date) and a similarly impressive range of software options that handle these demands.
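To make the deduplication and integrity-checking ideas above concrete, here is a toy content-addressed store in Python. It is not how Data Domain or its Data Invulnerability Architecture is implemented. It assumes SHA-256 fingerprints and fixed-size chunks for brevity (production deduplication systems typically use variable-length segmentation), and only shows why duplicate content costs almost nothing to store and how a fingerprint lets data be re-verified on write, periodically and on read. `ChunkStore`, `scrub` and `IntegrityError` are hypothetical names.

```python
import hashlib


class IntegrityError(Exception):
    """Raised when stored bytes no longer match their fingerprint."""


def _fingerprint(chunk: bytes) -> str:
    return hashlib.sha256(chunk).hexdigest()


class ChunkStore:
    """Toy content-addressed store: identical chunks are kept once and re-verified."""

    def __init__(self, chunk_size=4096):
        self.chunk_size = chunk_size
        self.chunks = {}  # fingerprint -> chunk bytes (each unique chunk stored once)
        self.files = {}   # file name -> ordered list of fingerprints

    def write(self, name, data):
        fingerprints = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            fp = _fingerprint(chunk)
            if fp not in self.chunks:  # deduplication: new content only
                self.chunks[fp] = chunk
            # Verify after the write: the stored bytes must hash back to the fingerprint.
            if _fingerprint(self.chunks[fp]) != fp:
                raise IntegrityError(f"integrity check failed writing {name}")
            fingerprints.append(fp)
        self.files[name] = fingerprints

    def scrub(self):
        """Periodic check: return fingerprints whose stored bytes no longer match."""
        return [fp for fp, chunk in self.chunks.items() if _fingerprint(chunk) != fp]

    def read(self, name):
        out = bytearray()
        for fp in self.files[name]:
            chunk = self.chunks[fp]
            if _fingerprint(chunk) != fp:  # verify again at retrieval
                raise IntegrityError(f"integrity check failed reading {name}")
            out += chunk
        return bytes(out)

    def stored_bytes(self):
        return sum(len(c) for c in self.chunks.values())
```

With `chunk_size=4`, writing `b"AAAABBBB"` and then `b"AAAABBBBCCCC"` stores only three unique chunks (12 bytes) for 20 bytes of logical data, and a corrupted chunk is caught both by `scrub()` and on the next `read()`.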

The EMC Data Protection Suite for Archive supports your file, email and SharePoint archiving needs, and you can easily add functions for supervision and discovery as needed. Its foundation is EMC SourceOne, which scales from small, single-server deployments for teams and small businesses all the way to vast enterprises with hundreds of thousands of mailboxes, petabyte-scale file storage and ginormous SharePoint farms. EMC SourceOne provides you with the application integration and the visibility required.

Let’s go!

The time to strike is now. Data growth isn't waiting, and you need situational awareness sooner rather than later. Reach out to your EMC partner of choice or your EMC representative and get an assessment of your situation. There are readily available tools that look into your email, file servers and SharePoint data and assess volume, age and utilization. This gives you the picture you need to build a strategy and start executing on it.
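As a rough idea of what such an assessment measures, here is a hypothetical Python sketch that walks a file share and totals file count and bytes by age. It assumes modification time is a fair proxy for age (access times are often not maintained reliably) and uses arbitrary age buckets; the `assess` function is illustrative only, and real assessment tools for email and SharePoint work differently.

```python
import os
import time
from collections import Counter

# Assumed age buckets in days; anything older falls into ">3y".
BUCKETS = [(365, "<1y"), (3 * 365, "1-3y")]


def assess(root, now=None):
    """Walk `root` and total file count and bytes per age bucket (by modification time)."""
    now = time.time() if now is None else now
    count, size = Counter(), Counter()
    for dirpath, _dirnames, filenames in os.walk(root):
        for fname in filenames:
            try:
                st = os.stat(os.path.join(dirpath, fname))
            except OSError:
                continue  # unreadable or vanished file: skip it
            age_days = (now - st.st_mtime) / 86400
            label = next((lbl for limit, lbl in BUCKETS if age_days < limit), ">3y")
            count[label] += 1
            size[label] += st.st_size
    return count, size
```

A large share of bytes in the older buckets is a good first indicator of static data that could move to the archive.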

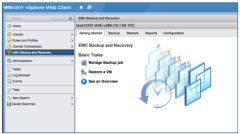

Fundamentally, DBAs are able to easily manage their own backups with their native tools and RMAN scripts, but NetWorker provides backup catalog synchronization as soon as these RMAN scripts are kicked off.

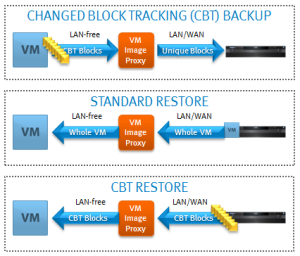

EMC is adding support for Hyper-V 2012 to the already existing Hyper-V 2008 support.

All these new systems are based on Intel Sandy Bridge processors and in some cases provide 4x more performance than the systems they are replacing.
